Multi-GPU distributed deep learning training at scale with Ubuntu18 DLAMI, EFA on P3dn instances, and Amazon FSx for Lustre | AWS Machine Learning Blog

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

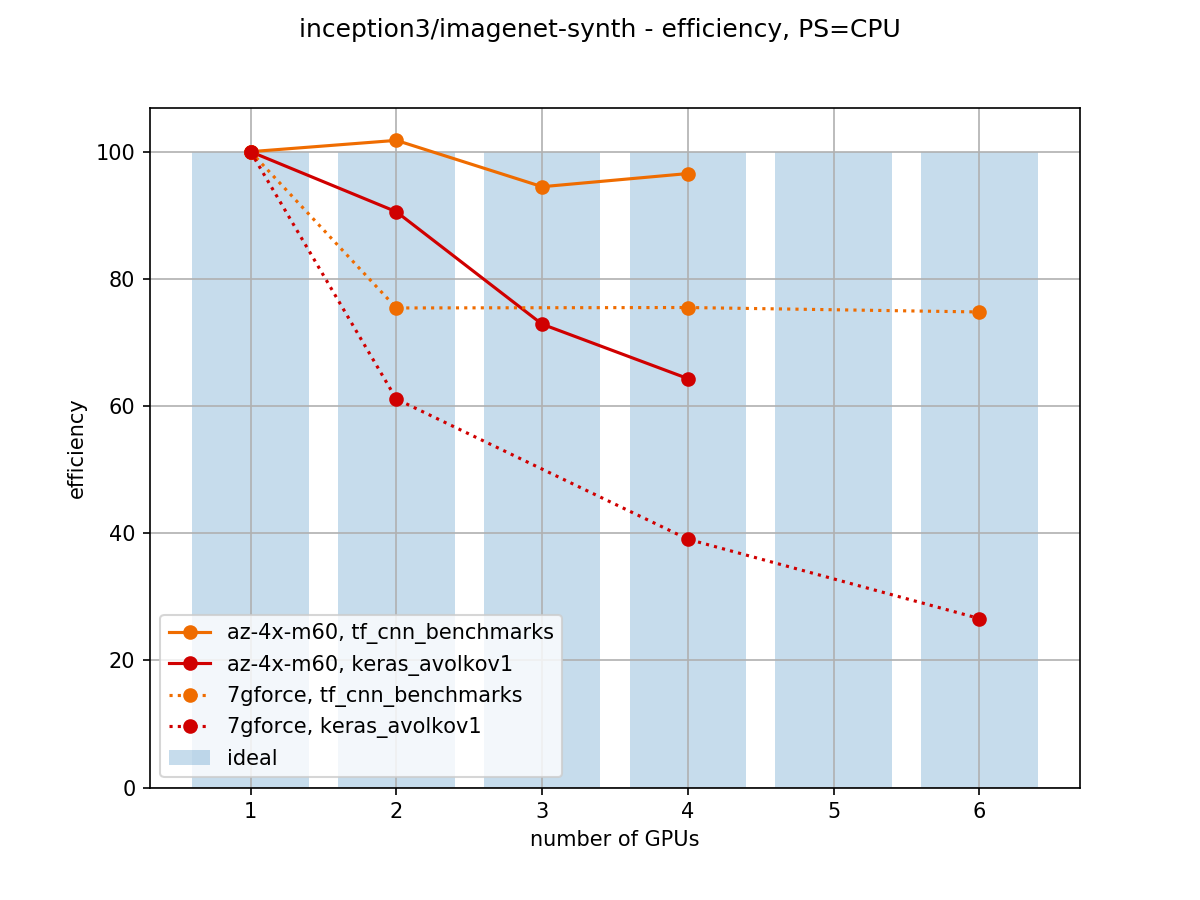

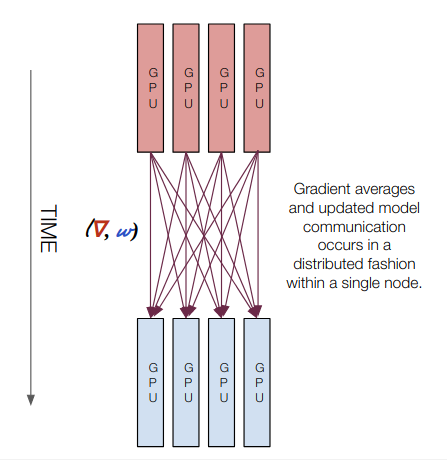

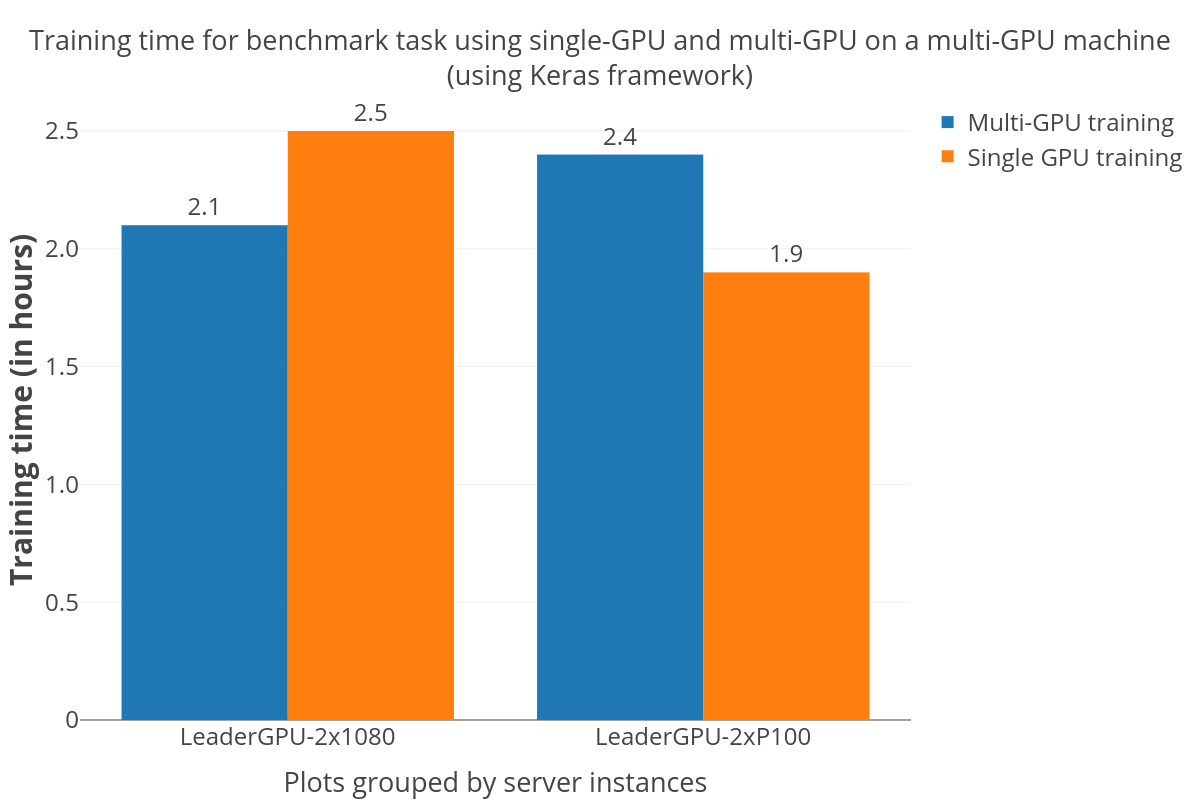

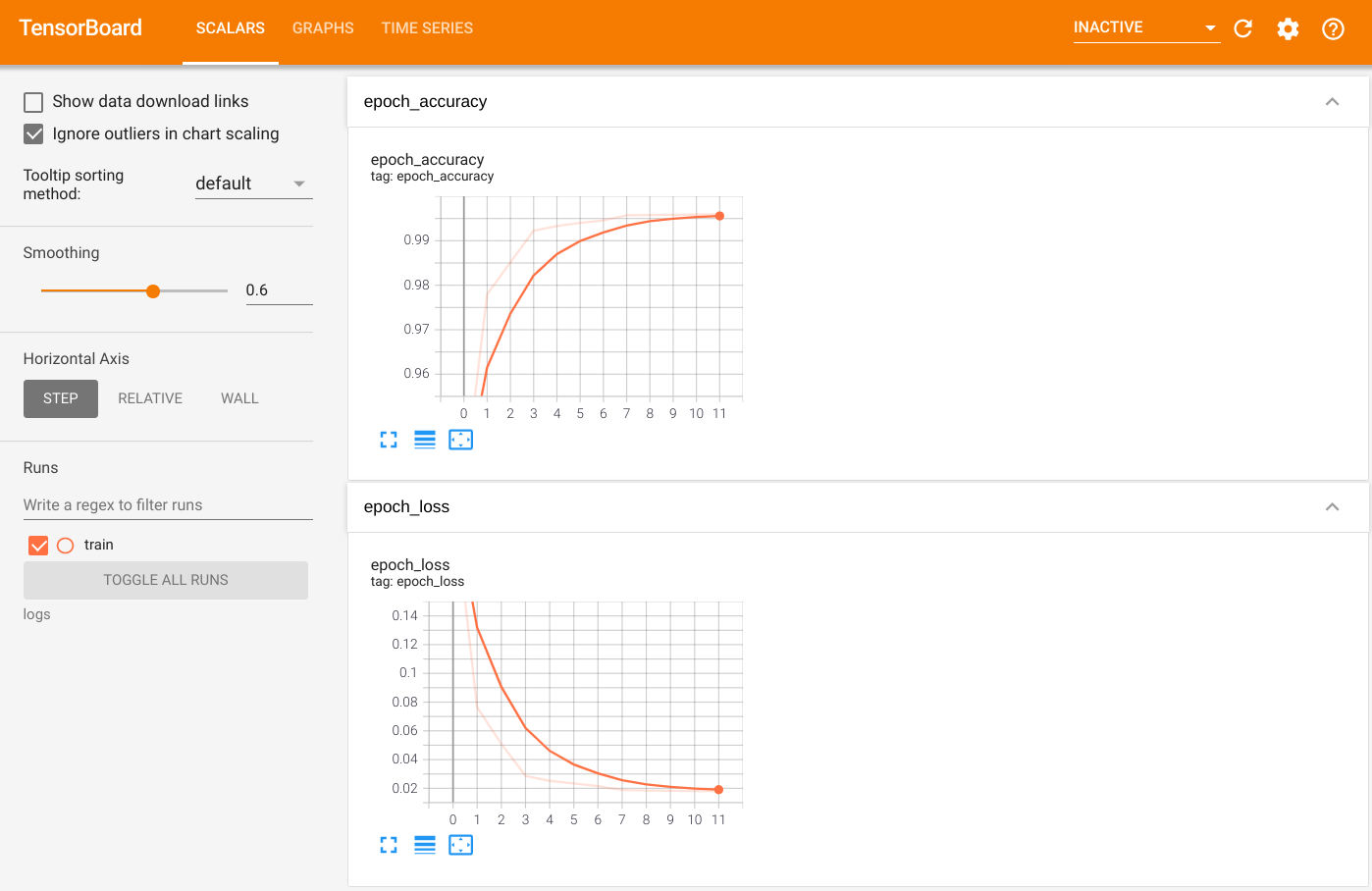

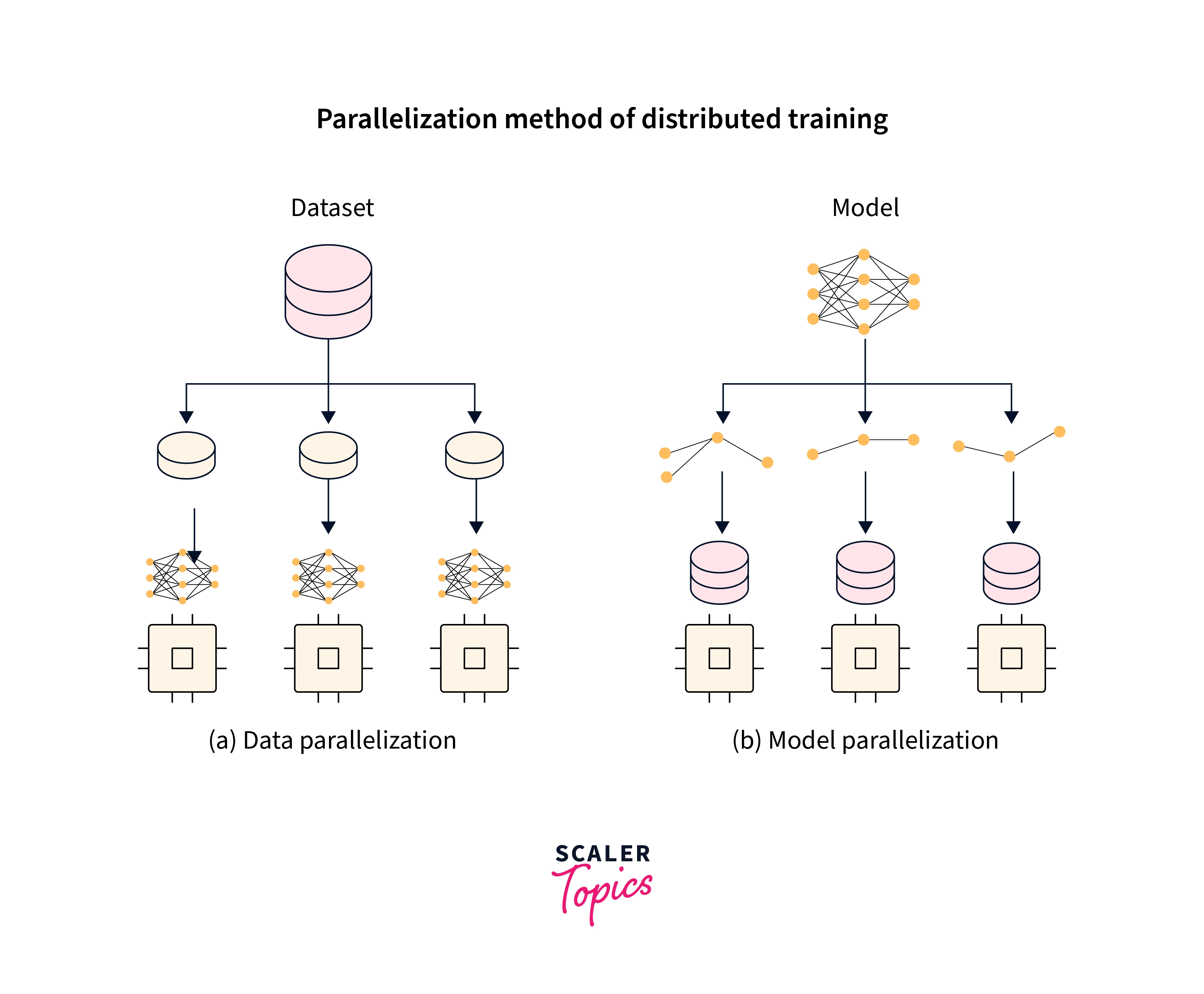

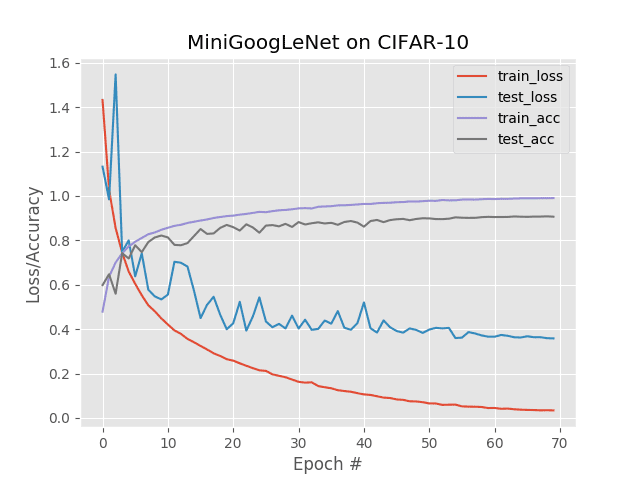

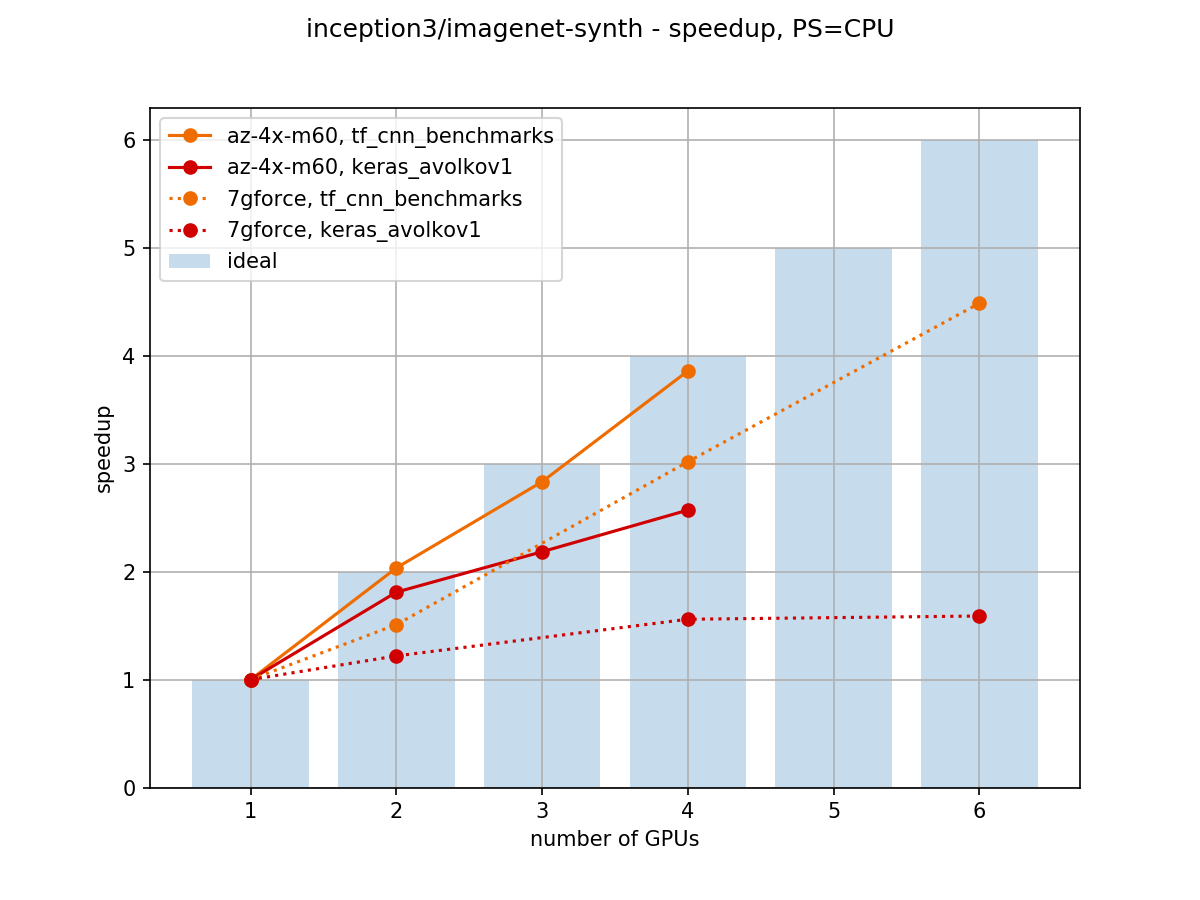

Towards Efficient Multi-GPU Training in Keras with TensorFlow | by Bohumír Zámečník | Rossum | Medium